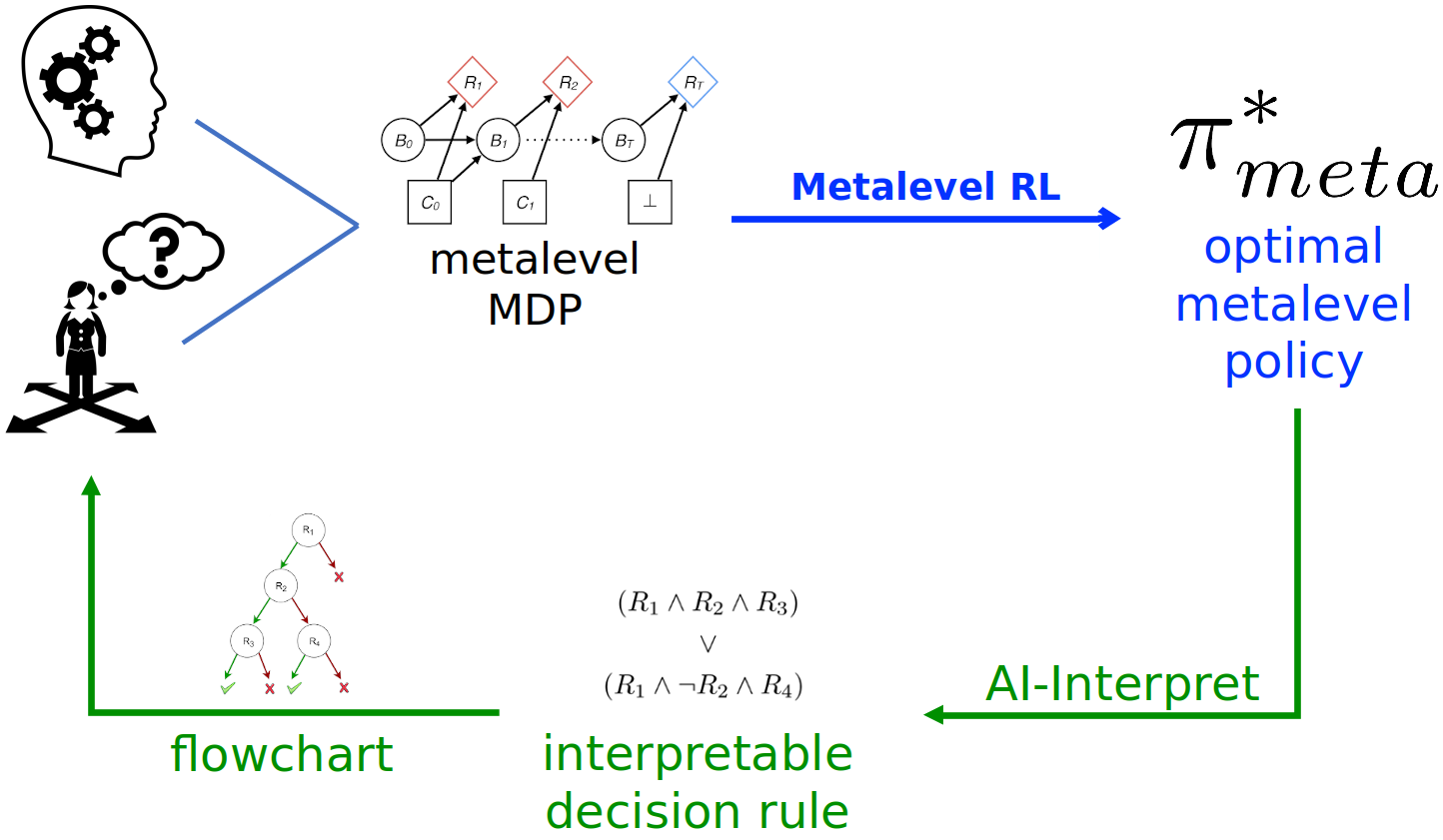

In this project, we aim to generate efficient and interpretable descriptions of the heuristics and general principles for good decision-making that our strategy discovery method uncovers. As a first step toward that end, we introduced a new Bayesian imitation learning algorithm called AI-Interpret (Skirzynski, Becker, & Lieder, 2020). The algorithm employs a new clustering method to identify a large subset of demonstrations that can be accurately described by a simple, high-performing decision rule. Experimental studies showed that transforming descriptions obtained with AI-Interpret into flowcharts improves human understanding and performance in a series of decision tasks.

Motivated by these results, we currently focus on three aspects of interpretable strategy discovery, each associated with one of three fields: Psychology, Computer Science, or Cognitive Science. In Psychology, we study the interpretability of procedural descriptions as an alternative way of representing complex strategies. We also investigate other features of strategy descriptions, such as determinism, hierarchy, expressiveness, and support of demonstrations. In Computer Science, we are working on introducing temporal logic into the existing AI-Interpret framework. We are also developing algorithms that generate hierarchical, procedural descriptions, and we are exploring how to combine all the features we find crucial for interpretability in a single method. In Cognitive Science, we investigate whether these tools can be used to understand and decipher the complex and sometimes erratic planning behavior of humans.

In the future, we aim to study how the strategies and principles that we discover transfer to real-life problems.