This project aims to design planning strategy discovery algorithms that remain effective in large and complex environments. AI has yet to catch up to the computational efficiency of human planning. Research in cognitive science and neuroscience suggests that the brain decomposes long-term planning into goal-setting and planning at multiple hierarchically nested timescales (Carver and Scheier 2001, Botvinick 2008).

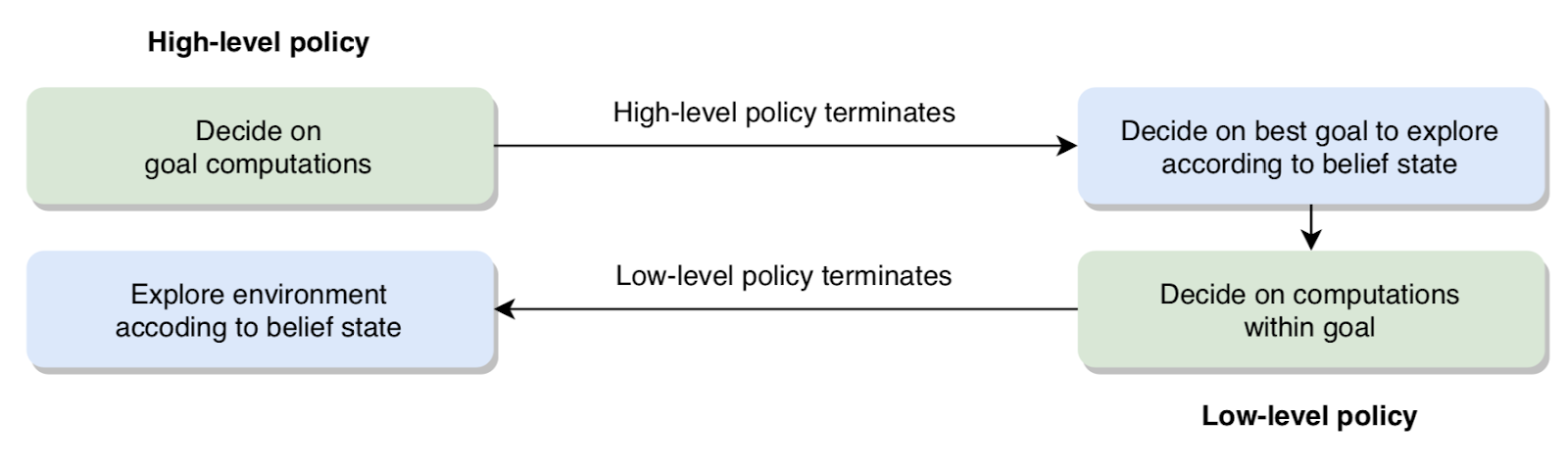

Inspired by this, we built on strategy discovery algorithms that use metalevel reinforcement learning (Callaway et al. 2018) and rational metareasoning (Russell and Wefald 1991) to find the optimal planning strategy for a given environment. Our extension incorporates hierarchical structure into the space of possible planning strategies. Concretely, the planning task is decomposed into two steps: first selecting one of the possible final destinations as the goal, based solely on that destination's own value, and then planning the path to the selected goal. We evaluated our algorithm on the Mouselab-MDP environment (Callaway et al. 2017).
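The two-step decomposition can be illustrated with a minimal Python sketch. It is a toy example and not our strategy discovery algorithm: it assumes that all rewards are already known and that planning is free, whereas metalevel approaches also account for the cost of each planning operation. The names `Goal`, `select_goal`, `plan_path`, and `hierarchical_plan`, as well as the reward values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Goal:
    """A possible final destination (hypothetical toy structure)."""
    name: str
    value: float               # reward at the final destination itself
    paths: List[List[float]]   # each path is a list of intermediate rewards


def select_goal(goals: List[Goal]) -> Goal:
    """High level: pick a goal based solely on its own value."""
    return max(goals, key=lambda g: g.value)


def plan_path(goal: Goal) -> Tuple[int, float]:
    """Low level: pick the best path to the already-selected goal."""
    best = max(range(len(goal.paths)), key=lambda i: sum(goal.paths[i]))
    return best, sum(goal.paths[best])


def hierarchical_plan(goals: List[Goal]) -> Tuple[str, int, float]:
    """Select a goal first, then plan only among the paths leading to it."""
    goal = select_goal(goals)
    path_index, path_reward = plan_path(goal)
    return goal.name, path_index, goal.value + path_reward


# Made-up rewards, for illustration only.
goals = [
    Goal("A", 10.0, [[-1.0, 2.0], [3.0, -4.0]]),
    Goal("B", 25.0, [[-5.0, 1.0], [2.0, 2.0]]),
    Goal("C", 5.0, [[4.0, 4.0]]),
]
result = hierarchical_plan(goals)
print(result)
```

Because the low-level step only considers paths to the selected goal, the paths to all other goals are never evaluated. This is where the reduction in planning effort comes from in this toy setting.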

We also investigate whether teaching people hierarchically structured strategies improves their planning performance.

In the future, our research will focus on designing algorithms that plan efficiently in environments with noisy observations. Such environments are more realistic and more challenging to solve.